Efficiency is paramount when it comes to data analytics, but as datasets grow in size and complexity, ensuring smooth operations becomes increasingly challenging.

We recently faced this challenge when a customer's Production Power BI semantic model began failing to refresh sporadically. The model had grown steadily and was nudging against the 12GB limit of their Power BI Premium P1 capacity.

We explored various potential solutions, from shrinking the semantic model to beefing up gateway CPU and Power BI capacity. However, these approaches hit a wall, either because of the dataset's demanding requirements or because of the substantial cost increase involved.

So how did we succeed? By using the Best Practice Analyzer in Tabular Editor!

Enter the Best Practice Analyzer!

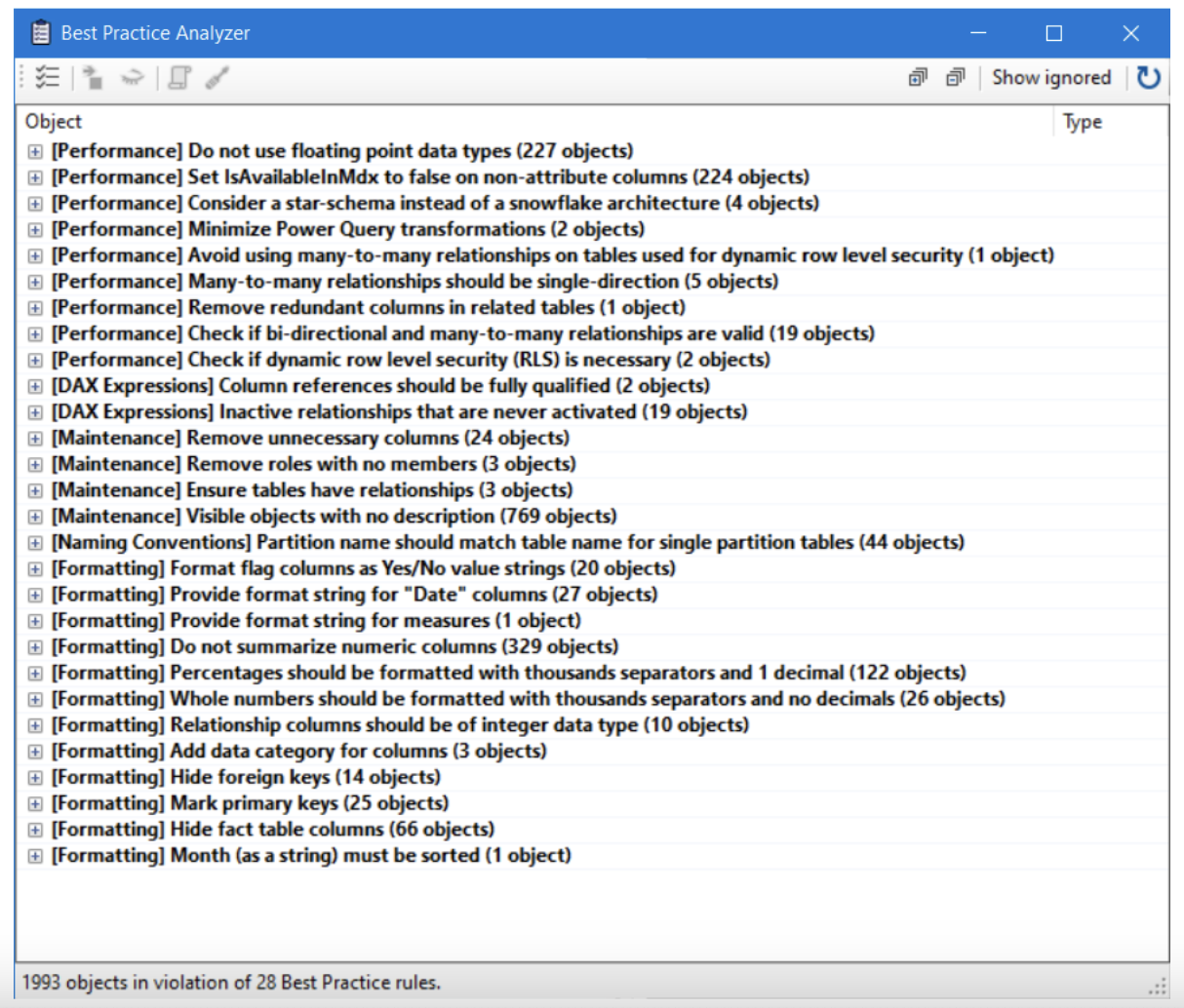

By using tools like DAX Studio and Tabular Editor, we were able to diagnose and rectify performance bottlenecks in Power BI data models. The Best Practice Analyzer is a feature of Tabular Editor that flags potential modelling missteps and suggests changes to improve model design and performance. It offers a set of default rules, which we used in this example, but it can also be customised: users can select a tailored subset of rules or add their own to reflect specific business needs.
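The sketch below gives a rough idea of what a custom rule looks like by writing a single rule to a JSON file. The field names (`ID`, `Name`, `Category`, `Description`, `Severity`, `Scope`, `Expression`, `FixExpression`, `CompatibilityLevel`) follow the shape of the publicly shared Best Practice Analyzer rule files. The rule itself, its ID, its expressions and the file name `custom_bpa_rules.json` are hypothetical. Check the format, and how rule files are loaded, against your Tabular Editor version before relying on it.

```python
import json
from pathlib import Path

# Hypothetical business-specific rule: surrogate key columns (names ending
# in "Key") exist for relationships and should be hidden from report users.
# Field names mirror the public BPA rule files; all values are illustrative.
custom_rule = {
    "ID": "HIDE_KEY_COLUMNS",  # hypothetical rule ID
    "Name": "[Formatting] Hide surrogate key columns",
    "Category": "Formatting",
    "Description": "Columns ending in 'Key' are used for relationships and should not be visible to report users.",
    "Severity": 2,
    "Scope": "DataColumn",
    # Condition evaluated against each object in scope (illustrative expression)
    "Expression": 'Name.EndsWith("Key") and not IsHidden',
    # Optional fix run on each violating object when a fix is applied
    "FixExpression": "IsHidden = true",
    "CompatibilityLevel": 1200,
}


def write_rules(rules, path):
    """Write a list of rules as a JSON array, the shape used by BPA rule files."""
    path = Path(path)
    path.write_text(json.dumps(rules, indent=2), encoding="utf-8")
    return path


if __name__ == "__main__":
    out = write_rules([custom_rule], "custom_bpa_rules.json")
    print(f"Wrote {out}")
```

Keeping custom rules in a separate file like this makes it easy to version them alongside the model and share them across a team.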

Our investigation

We conducted the investigation on the Development version of the semantic model, which currently sits at 7.2GB in the Power BI service.

Running the Best Practice Analyzer against this model raised a number of issues.

The issues raised are grouped into categories such as Performance, Maintenance and Formatting. A neat feature of the tool is that some issues can be fixed automatically by right-clicking them and selecting ‘Apply Fix’.
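Conceptually, each rule pairs a condition with an optional fix. The analyzer reports every object that meets the condition, grouped by category. The sketch below is a toy Python model of that idea, not Tabular Editor's implementation. The `Column` and `Rule` classes, the two rules and the sample columns are all hypothetical and heavily simplified.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Column:
    # Hypothetical, heavily simplified stand-in for a model column
    name: str
    data_type: str
    is_hidden: bool = False


@dataclass
class Rule:
    name: str
    category: str
    condition: Callable[[Column], bool]             # True means the column violates the rule
    fix: Optional[Callable[[Column], None]] = None  # None means no automatic fix


def analyze(rules, columns):
    """Return violations grouped by category: {category: [(rule, column), ...]}."""
    report = defaultdict(list)
    for rule in rules:
        for col in columns:
            if rule.condition(col):
                report[rule.category].append((rule, col))
    return dict(report)


def apply_fixes(report):
    """Run the fix for every violation whose rule has one; return how many were fixed."""
    fixed = 0
    for violations in report.values():
        for rule, col in violations:
            if rule.fix is not None:
                rule.fix(col)
                fixed += 1
    return fixed


def _set_decimal(col):
    col.data_type = "Decimal"


def _hide(col):
    col.is_hidden = True


# Two illustrative rules in different categories
rules = [
    Rule("Do not use floating point data types", "Performance",
         lambda c: c.data_type == "Double", _set_decimal),
    Rule("Hide surrogate key columns", "Formatting",
         lambda c: c.name.endswith("Key") and not c.is_hidden, _hide),
]

columns = [
    Column("SalesAmount", "Double"),
    Column("CustomerKey", "Int64"),
    Column("OrderDate", "DateTime"),
]

report = analyze(rules, columns)
for category, violations in report.items():
    for rule, col in violations:
        print(f"{category}: {rule.name} -> {col.name}")
print("Fixed:", apply_fixes(report))
print("Remaining:", analyze(rules, columns))
```

Re-running the analysis after applying fixes, as in the last line, is a cheap way to confirm that each fix actually cleared the violation it targeted.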

The results

After applying the subset of changes relevant to our use case, we reduced the semantic model's size considerably.

That’s a 21% reduction in semantic model size! Implementing similar adjustments in our production semantic model should make refreshes more dependable by keeping us clear of the Power BI Premium P1 capacity limits.
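The sketch below works through the arithmetic behind that claim. It applies the 21% figure to the 7.2GB Development model. It then projects the same saving onto a production model assumed, purely for illustration, to sit at the 12GB limit. That projection further assumes the same proportional saving carries over to production, which would need to be verified.

```python
def reduced_size(size_gb: float, reduction: float) -> float:
    """Size after a fractional reduction, e.g. reduction=0.21 for 21%."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return size_gb * (1.0 - reduction)


DEV_SIZE_GB = 7.2    # Development model size from the investigation
REDUCTION = 0.21     # reported reduction (rounded)
PROD_SIZE_GB = 12.0  # assumption: production model sitting at the 12GB limit

dev_after = reduced_size(DEV_SIZE_GB, REDUCTION)
prod_after = reduced_size(PROD_SIZE_GB, REDUCTION)  # assumes the same % saving applies
print(f"Development: {DEV_SIZE_GB:.1f}GB -> ~{dev_after:.1f}GB")
print(f"Production (hypothetical): {PROD_SIZE_GB:.1f}GB -> ~{prod_after:.1f}GB")
```

Because 21% is a rounded figure, treat both results as approximate.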

Informed decisions and harnessing potential

While optimising Power BI semantic models is fraught with challenges and trade-offs, tools like the Best Practice Analyzer accelerate our development and performance tuning, helping us make informed decisions and get more out of our data models. In Part 2, we'll look at the underlying logic of Power BI to understand why the fixes recommended by the Best Practice Analyzer are so impactful.

Note: While the approach discussed may help improve performance, it's essential to test and evaluate its impact thoroughly within your specific environment, considering factors such as user requirements and operational constraints.
